The Keyword in Anchor Text Factor Will Stay Low, But Won’t Decrease So Heavily: An Interview with Marcus Tober

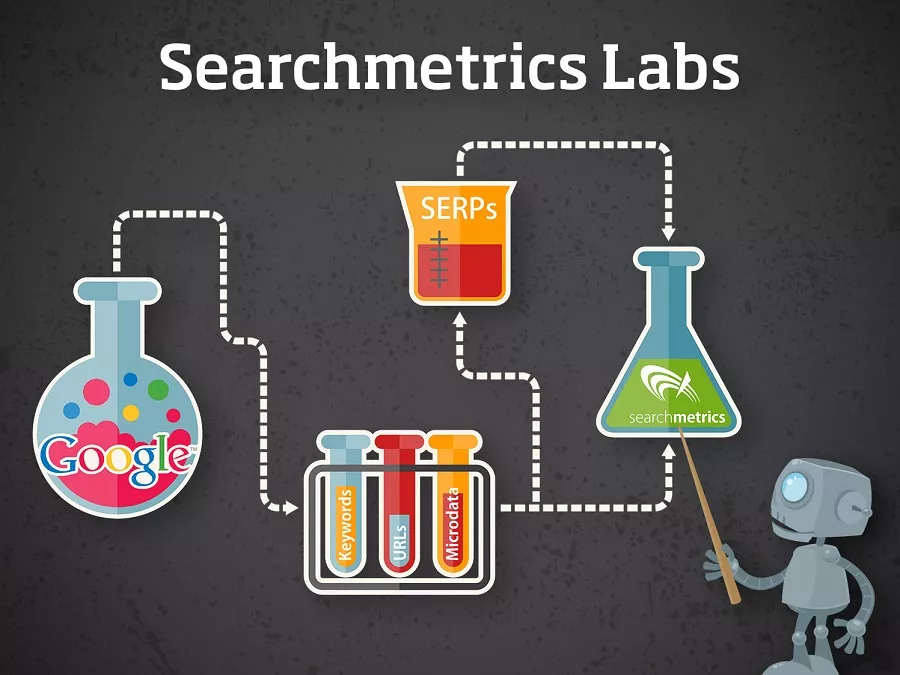

The Searchmetrics Ranking Factors Study is the most anticipated SEO survival guide for millions of people concerned with search, content and online marketing. Marcus Tober, founder and CTO of Searchmetrics, told us about this year's surprising results and the factors that set search trends in 2014.

— Thank you. Each year, we work really hard to prepare the study and to improve how we do it, in order to provide valuable information for people. And indeed, there was one thing this year that surprised me. When you look at the correlation values, you see that the correlation for backlinks with the keyword in the anchor text has increased compared to 2013. At first, I thought this must be a data error, because we all know that Google has devalued this factor, not least over the course of the Penguin Update iterations. But when you look at the average values, it becomes clear that the actual share of those “keyword links” has decreased (by more than 50%, by the way). It’s just the differences between the positions that have changed. And that’s exactly why it is so important to look not only at the correlations, but at the average values at the same time.
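To make the point about correlations versus averages concrete, here is a minimal sketch in Python. The numbers are invented for illustration and are not taken from the Searchmetrics study, and a plain Pearson correlation stands in for whatever correlation measure the study itself uses. The sketch shows that the average share of keyword links can fall by more than half while the correlation with ranking position rises, simply because the differences between positions become more systematic.

```python
from statistics import mean

def pearson(xs, ys):
    """Plain Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(xs), mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5

# Hypothetical data: percentage of backlinks with the keyword in the
# anchor text, for the pages ranking at positions 1..10.
positions = list(range(1, 11))
share_2013 = [40, 42, 39, 41, 40, 41, 39, 40, 42, 40]  # high but flat
share_2014 = [20, 19, 18, 17, 16, 15, 14, 13, 12, 11]  # low but ordered

def ranking_correlation(shares):
    """Correlate shares with rank quality (position 1 is best, so negate)."""
    rank_quality = [-p for p in positions]
    return pearson(rank_quality, shares)

for year, shares in (("2013", share_2013), ("2014", share_2014)):
    print(year,
          "mean share:", mean(shares),
          "correlation:", round(ranking_correlation(shares), 2))
```

In this made-up example the 2014 average is less than half the 2013 average, yet the 2014 correlation is far higher, which is why reading either number alone would be misleading.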

— Since we have found a strong relationship (and this time I do not only mean “correlation”) between user signals and rankings, we plan to make it one of the constant factors, yes.

— One should be very careful when using the words “social signals”, “impact” and “Google rankings” in one and the same sentence. What we can say is that social signals show a very high correlation with good rankings. But there is always the question of what comes first: the good ranking or the social signals. It’s obvious that relevant and appealing content attracts social signals. Furthermore, Google has always stated that they don’t use social signals as a ranking factor. From my perspective, social signals are at least a very good indicator for search engines of where new (and maybe relevant) content is and what it is about.

— The era of link farms is over. I’m not saying that the methods for creating a certain link profile, mostly methods that fall under the label “black hat SEO”, do not work anymore. Actually, some of these methods, like links from PBNs, do work surprisingly well, at least in the short term. Google remains a machine. And a machine works with algorithms, and these algorithms can be cheated in some way. In the past, it was pretty easy to achieve good rankings with the right methods. But from year to year, the possibilities for cheating Google become fewer and fewer. What counts in the end for the majority of online businesses is long-term success. And you won’t reach this long-term success with tactical methods, but with strategic approaches and relevant, holistic content providing the best possible user experience.

— The development of the factor “Keyword in Anchor Text” has been negative over three years. As I already said, Google has devalued the influence of keyword links, not least with the Penguin Updates. This is reflected in the data. In the past, keyword linking was a very successful method to boost your ranking because the search engine considered sites with many links anchored on a certain keyword to be relevant for this keyword. As Google has shifted its focus away from the keyword as such (in both on-page and off-page features) and towards whole topics, the importance of the factor “keyword” has strongly decreased. But of course, a certain share of keyword links is always natural. That’s why I think this factor will stay low in the future, but won’t decrease as heavily as in recent years.

— Yes. There is a different Flesch formula for each language, because every language is different. Sounds trivial, but that’s the way it is. The language-specific formula takes into account syllable and sentence length or word complexity, for example. Languages behave differently regarding inflection, morphology and/or syntax. That’s why different parameters are needed to analyze them. This results in a different scale value, which ranges from 0 to 100. For our study, we used the Flesch formula for the English language for German as well, for example, because we focused on the correlation itself, not the scale value in detail.
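As an illustration of how the language-specific parameters differ, the sketch below implements the commonly published Flesch Reading Ease formula for English next to Amstad's adaptation that is usually cited for German. The interview does not spell out either formula, so the constants are taken from the general readability literature, and the function names are made up for this example. Word, sentence and syllable counts are passed in directly, because syllable counting is itself language-dependent.

```python
def flesch_english(words, sentences, syllables):
    """Flesch Reading Ease with the constants published for English."""
    avg_sentence_length = words / sentences      # words per sentence
    avg_syllables_per_word = syllables / words   # syllables per word
    return 206.835 - 1.015 * avg_sentence_length - 84.6 * avg_syllables_per_word

def flesch_german(words, sentences, syllables):
    """Amstad's adaptation of the Flesch formula, commonly cited for German."""
    avg_sentence_length = words / sentences
    avg_syllables_per_word = syllables / words
    return 180.0 - avg_sentence_length - 58.5 * avg_syllables_per_word

# Same hypothetical text statistics scored with both parameter sets.
counts = {"words": 100, "sentences": 5, "syllables": 150}
print("English formula:", flesch_english(**counts))
print("German formula: ", flesch_german(**counts))
```

The two formulas give different scale values for identical counts, but both fall as sentences and words get longer, so applying one formula across languages can still be reasonable when only the correlation is of interest.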

— Not only SEO specialists but everybody who is concerned with search, content and online marketing should be familiar with the deep learning approach. This is one of Google's major developments in its mission to evaluate and structure its search results according to content quality and semantics. The major buzzwords in this context are word co-occurrence analysis and word semantics. With this kind of approach, Google has become quite sophisticated at discovering semantic relationships between single words and structuring them according to entities. At the same time, this approach is one of the major reasons for the shift from single keywords to holistic topics.

— Content needs to be:

- Relevant;

- Holistic;

- Informative;

- User group / search intention specific;

- Able to provide an additional benefit for the user as well as a good user experience.

— Not necessarily. Visual content is definitely on the rise. Images or videos often provide a good user experience. People just like nice pictures and/or entertaining or informative videos they can consume. But from my perspective, there is not really a conflict between visual content and text length. Both phenomena are able to coexist. Not least, they probably fulfill different search intentions and each occupy a niche of their own. The increase in text length has to be evaluated against the background of word co-occurrence and semantics. It doesn’t mean that you just have to write “more” content to rank better. The opposite is true. It won’t help you to create more and more content if it’s irrelevant. Irrelevant content generates bad user signals (time on site, bounce rate), and this would rather hurt your rankings. What we found is that content ranking in the top positions is longer on average. And this has more to do with the structure of natural language, relevancy and the fulfillment of search intentions.

— I think Google has made its point. People who haven’t understood in which direction SEO, search, search engines and content marketing are developing should do their homework. Google is able both to understand the meaning behind queries and content and to detect spam or irrelevant content. Recent updates like Panda 4.1 or the Pirate Update are absolutely in line with Google's strategy of providing the most relevant result(s) for the user. That’s why we have seen many aggregators losing SEO Visibility (again). What was new was the fact that updates now roll out slowly. Panda 4.1, for example, took 5-6 weeks until the data had settled. And some losers lost every single week. On the other hand, some lost a few weeks later, and some have already recovered. I’m very curious whether future updates will be of the same kind.

— 1-7: Searchmetrics :)
