A/B Testing on Facebook: What Is It and How to Set Up an Ad Split Test?

If you have multiple ads for a marketing campaign, you may be wondering which one will perform best. It's impossible to say for sure before launching the campaign, so you need to test. A/B testing (split testing) is an approach that helps marketers test hypotheses and rely on data rather than intuition.

In paid advertising, A/B testing is a powerful tool for PPC specialists, as it allows them to test hypotheses and analyze user behavior in practice. Specialists can then make informed decisions about ad changes. In this post, I'm going to talk about A/B testing for Facebook ads.

What you need to know about A/B testing

A/B testing allows you to compare two advertising strategies by changing a specific element in your ad. You can change the ad creative, text, audience, or placement.

The system randomly divides the target audience into two equal, non-overlapping groups and shows each group a different version of the ad.
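Meta performs this split for you, so you never have to implement it yourself. Still, a small sketch helps show what "random but stable" assignment means. The Python below is an illustration under our own assumptions, not Meta's actual mechanism: `assign_group` and its `test_name` parameter are hypothetical, and the hash serves only as a deterministic coin flip, so the same person always lands in the same group and the two groups never overlap.

```python
import hashlib


def assign_group(user_id: str, test_name: str) -> str:
    """Hypothetical helper: put a user into group "A" or "B" with ~50/50 odds.

    Hashing test_name together with user_id means the same user always gets
    the same group within one test, while different tests split independently.
    """
    key = f"{test_name}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    # Treat the hash as a large integer; its parity acts as a fair coin flip.
    return "A" if int(digest, 16) % 2 == 0 else "B"


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    groups = [assign_group(u, "headline-test") for u in users]
    # Each user is in exactly one group, so the groups never overlap.
    print("A:", groups.count("A"), "B:", groups.count("B"))
```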

A typical A/B testing strategy looks like this:

- Define goals and hypotheses (how changing one parameter will affect another).

- Choose the metrics you will use to evaluate performance.

- Run the test.

- Collect and validate data.

- Analyze the results and deploy changes.

Why test? First, even a well-performing ad can usually be improved. A/B testing often reveals changes that increase performance on the same budget. Second, audiences can get tired of an ad over time and stop engaging with it. Testing fresh variants helps restore engagement without sacrificing conversions.

How to plan A/B testing

Before testing, develop a hypothesis: a specialist's prediction of which change will increase the number of conversions. It is very important to have a clear, specific hypothesis and relevant metrics to measure the success of the experiment.

You can test any ad element:

- Headline. Test different headlines to grab your audience's attention. You can experiment with the tone of voice and length of your headlines.

- Ad text. You can paraphrase calls to action in the ad text, add numbers, or personalize the ad for your audience.

- Ad creative. Change the image or video, and test out different text, music, colors, and backgrounds on creatives. It's worth testing different options to understand their effects on audience attention and engagement.

- Call to action. It makes sense to test different calls to action, for example, ones that emphasize urgency (such as Buy Now).

- Target audience. You can test different demographics, interests, and behaviors to find out which audience responds best to your ad.
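Whichever element you pick, the two variants should differ in that element only. The Python check below is a hypothetical illustration, assuming each ad variant is described by the five elements listed above; the `AdVariant` class and its field names are our own, not part of any Meta tool.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class AdVariant:
    """Hypothetical description of one ad variant in a test."""
    headline: str
    ad_text: str
    creative: str   # e.g., the file name of the image or video
    cta: str
    audience: str


def changed_elements(a: AdVariant, b: AdVariant) -> list[str]:
    """Return the names of the elements that differ between two variants."""
    return [f.name for f in fields(AdVariant) if getattr(a, f.name) != getattr(b, f.name)]


def is_single_variable_test(a: AdVariant, b: AdVariant) -> bool:
    """A clean A/B test changes exactly one element."""
    return len(changed_elements(a, b)) == 1


if __name__ == "__main__":
    control = AdVariant("Summer sale", "Up to 30% off", "beach.jpg", "Shop Now", "Women 25-34")
    variant = replace(control, cta="Buy Now")  # change only the call to action
    print(changed_elements(control, variant), is_single_variable_test(control, variant))
```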

An important step in A/B testing planning is choosing the metrics to measure success. Clearly define what you want to achieve with the ad, such as the number of clicks, conversions, interactions, and so on. The selected metrics should be aligned with your business goals.

After the end of the testing period, analyze the effectiveness of each ad and choose the best one. You can focus on the following metrics:

- CTR (click-through rate). This indicator shows whether you have effectively identified the customer pain point and selected the right creative or call to action.

- CR (conversion rate). Shows the share of users who clicked the ad and then completed the target action, that is, how well the ad met their expectations.

- CPA (cost per conversion). Shows how much you pay, on average, for each target action, such as a purchase or sign-up.

- ROAS (return on ad spend). Shows how much revenue each unit of ad spend generates.
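These metrics are simple ratios, so they are easy to recompute from raw counts exported from Ads Manager. A minimal Python sketch, assuming you have impressions, clicks, conversions, spend, and attributed revenue for each variant; the `VariantStats` class and the sample figures are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class VariantStats:
    """Raw totals for one ad variant (hypothetical structure)."""
    name: str
    impressions: int
    clicks: int
    conversions: int
    spend: float    # ad spend in the account currency
    revenue: float  # revenue attributed to the ad, same currency


def ratio(numerator: float, denominator: float) -> float:
    """Safe division: return NaN instead of raising when the denominator is 0."""
    return numerator / denominator if denominator else math.nan


def metrics(v: VariantStats) -> dict[str, float]:
    return {
        "CTR": ratio(v.clicks, v.impressions),   # clicks per impression
        "CR": ratio(v.conversions, v.clicks),    # conversions per click
        "CPA": ratio(v.spend, v.conversions),    # spend per conversion
        "ROAS": ratio(v.revenue, v.spend),       # revenue per unit of spend
    }


if __name__ == "__main__":
    a = VariantStats("A", impressions=20_000, clicks=400, conversions=20, spend=300.0, revenue=900.0)
    b = VariantStats("B", impressions=20_000, clicks=500, conversions=20, spend=300.0, revenue=1_000.0)
    for v in (a, b):
        print(v.name, {k: round(x, 4) for k, x in metrics(v).items()})
```

With these made-up figures, variant B has the higher CTR but the lower CR, which is why the success metric should be fixed before the test starts rather than picked afterwards.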

A/B testing is a powerful tool for improving ad performance.

Here are some recommendations on things to avoid during A/B testing:

- Don't change too many elements at once. If you change multiple elements of your ad at once, it will be difficult to determine which element caused the change in results. Change only one element per test.

- Avoid biasing the results. The audience should be randomly divided into control and experimental groups.

- Don't stop the test too early. Give each ad variant enough time to collect statistics. Ending a test too early can lead to incorrect conclusions.

- Don't stop after one successful test, even if it shows good results. It's better to run several tests and analyze data from multiple sources to confirm or reject the hypothesis.

- Analyze data under real-world conditions. It's important to consider the time, location, target audience, and competition. These are all factors that can affect the results.
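To judge whether a difference in CTR or CR is real rather than noise, a common approach is a two-proportion z-test. Meta's Experiments tool reports its own results, and its method may differ, so treat the sketch below as an independent sanity check under stated assumptions: users are assigned independently, the sample size was fixed before the test began, and you read the result once, at the end. Checking repeatedly and stopping at the first "significant" reading inflates false positives, which is one more reason not to stop a test early. The function name and the click counts are hypothetical.

```python
import math


def two_proportion_z_test(successes_a: int, trials_a: int,
                          successes_b: int, trials_b: int) -> tuple[float, float]:
    """Compare two rates (e.g., CTR or CR); return (z, two-sided p-value)."""
    p_a = successes_a / trials_a
    p_b = successes_b / trials_b
    # Pooled rate under the null hypothesis that both variants perform the same.
    p_pool = (successes_a + successes_b) / (trials_a + trials_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / trials_a + 1 / trials_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all (e.g., zero clicks in both groups)
    z = (p_b - p_a) / se
    # Two-sided p-value for a standard normal: 2 * (1 - Phi(|z|)) = erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical CTR comparison: 400 vs. 500 clicks on 20,000 impressions each.
    z, p = two_proportion_z_test(400, 20_000, 500, 20_000)
    print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below your chosen threshold (commonly 0.05) suggests the difference is unlikely to be noise alone; it does not tell you how large the real effect is.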

How to do an A/B test

There are several ways to create A/B tests, depending on the variable you want to test and where you start creating the test. One of the most convenient ways is the Meta Ads Manager dashboard, which uses an existing campaign or ad set as a template.

To use this dashboard, follow these steps:

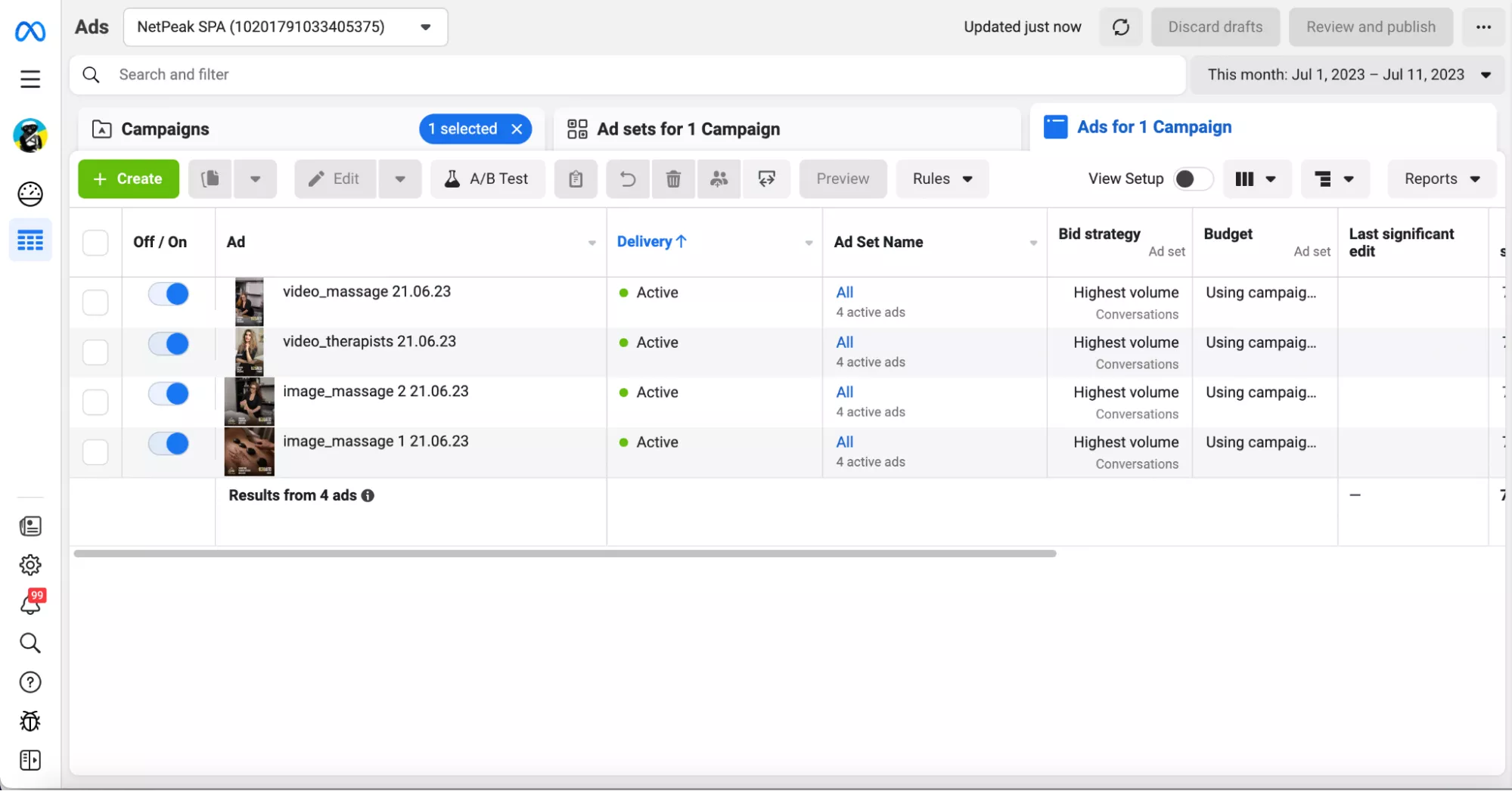

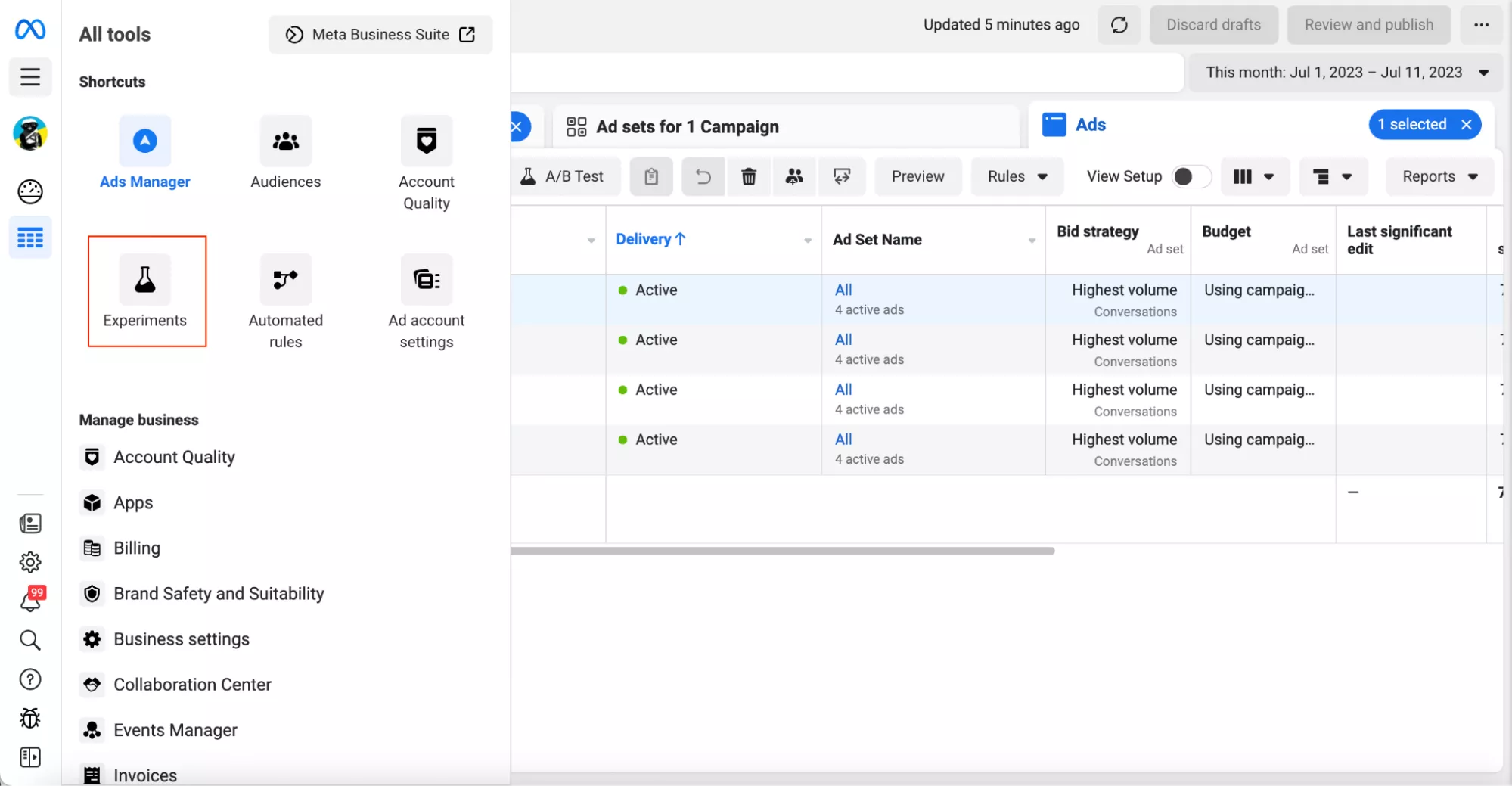

1. Go to the Ads Manager home page, where you can see your campaigns, ad sets, and ads.

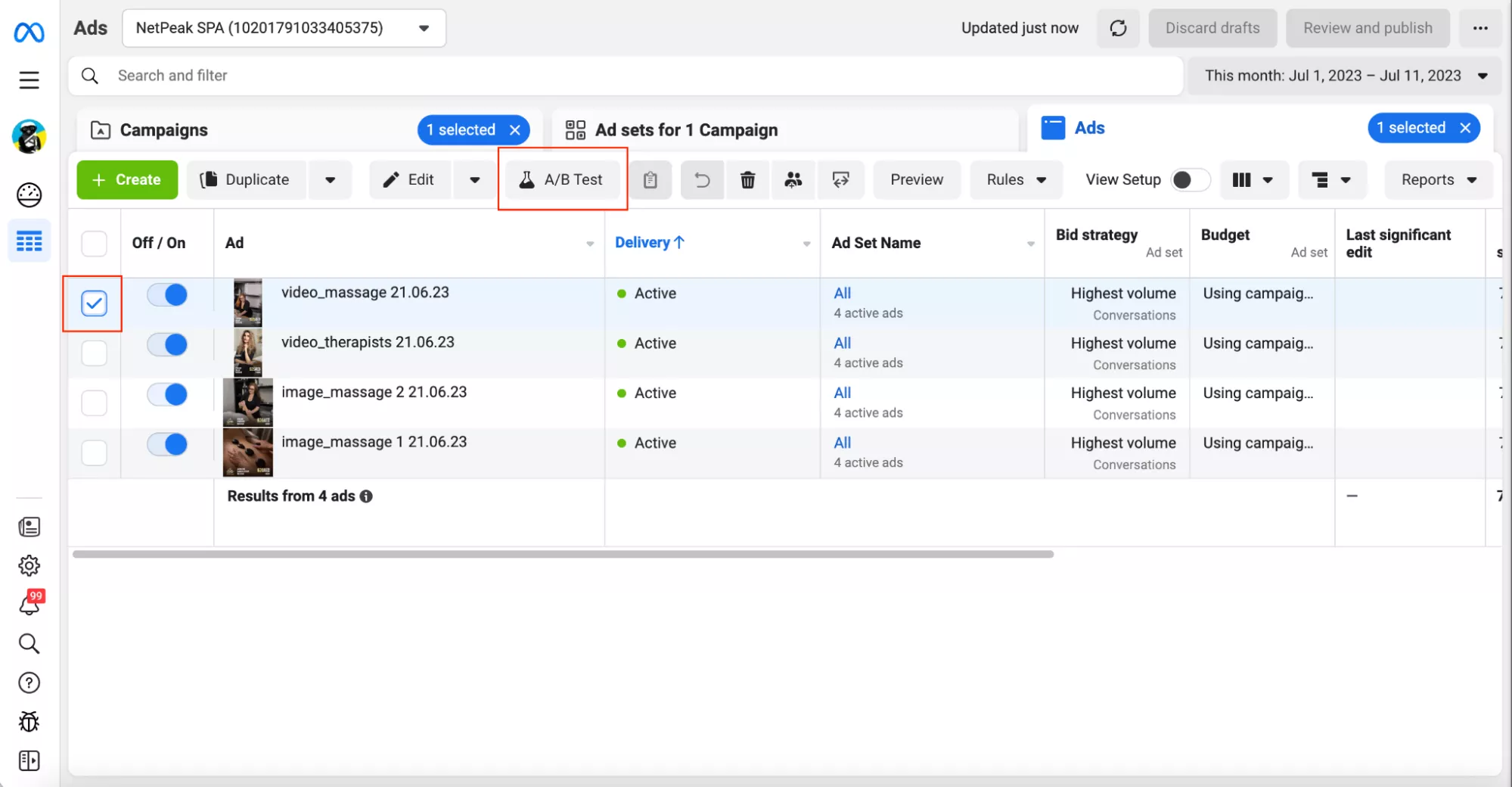

2. Select the checkbox to the left of the campaigns or ads you want to use for the A/B test. Click «A/B test» in the top toolbar.

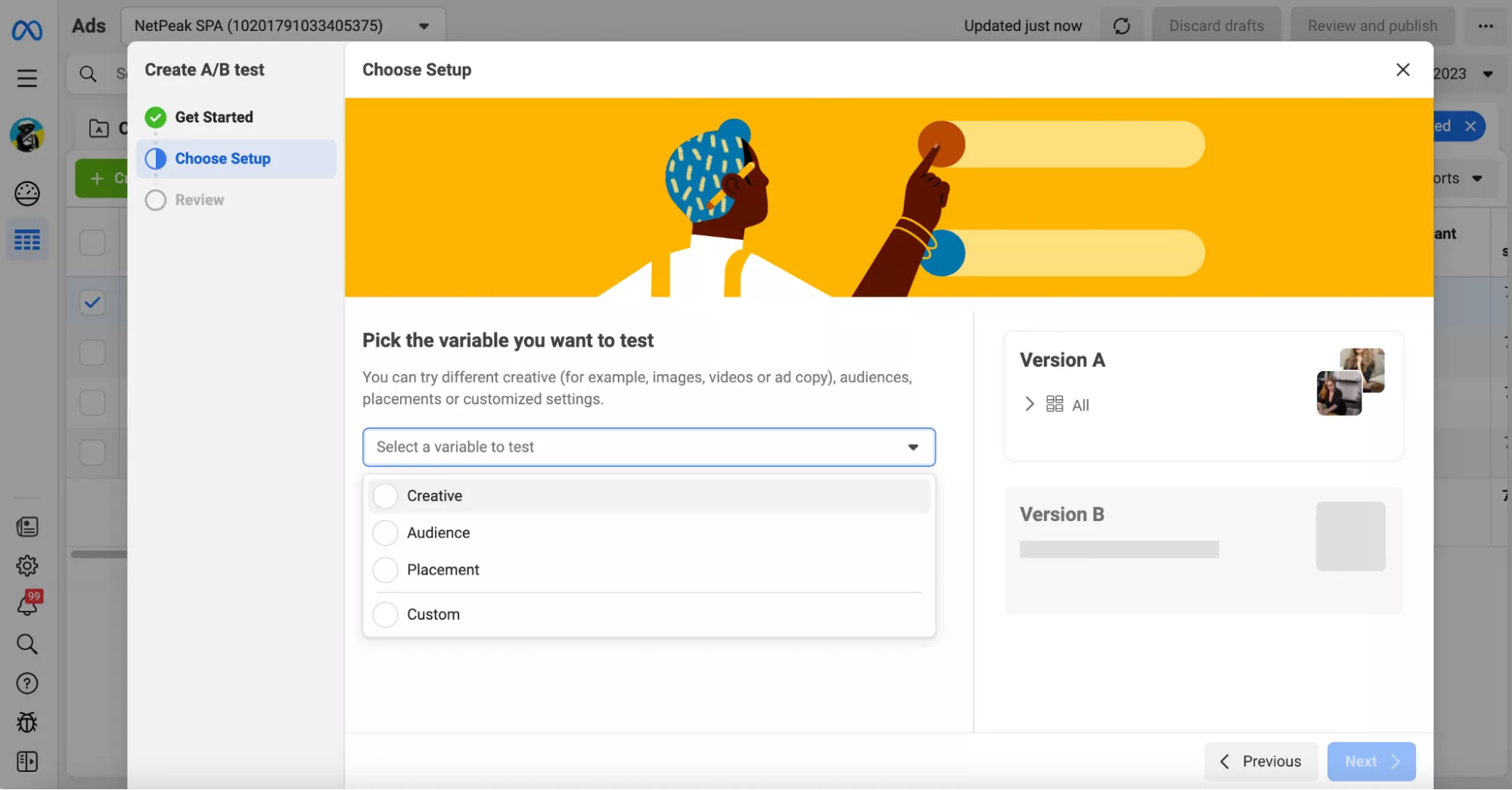

3. Select the variable to test: Creative, Audience, Placement, or Custom. Creative changes the ad itself, while the other options change the ad set.

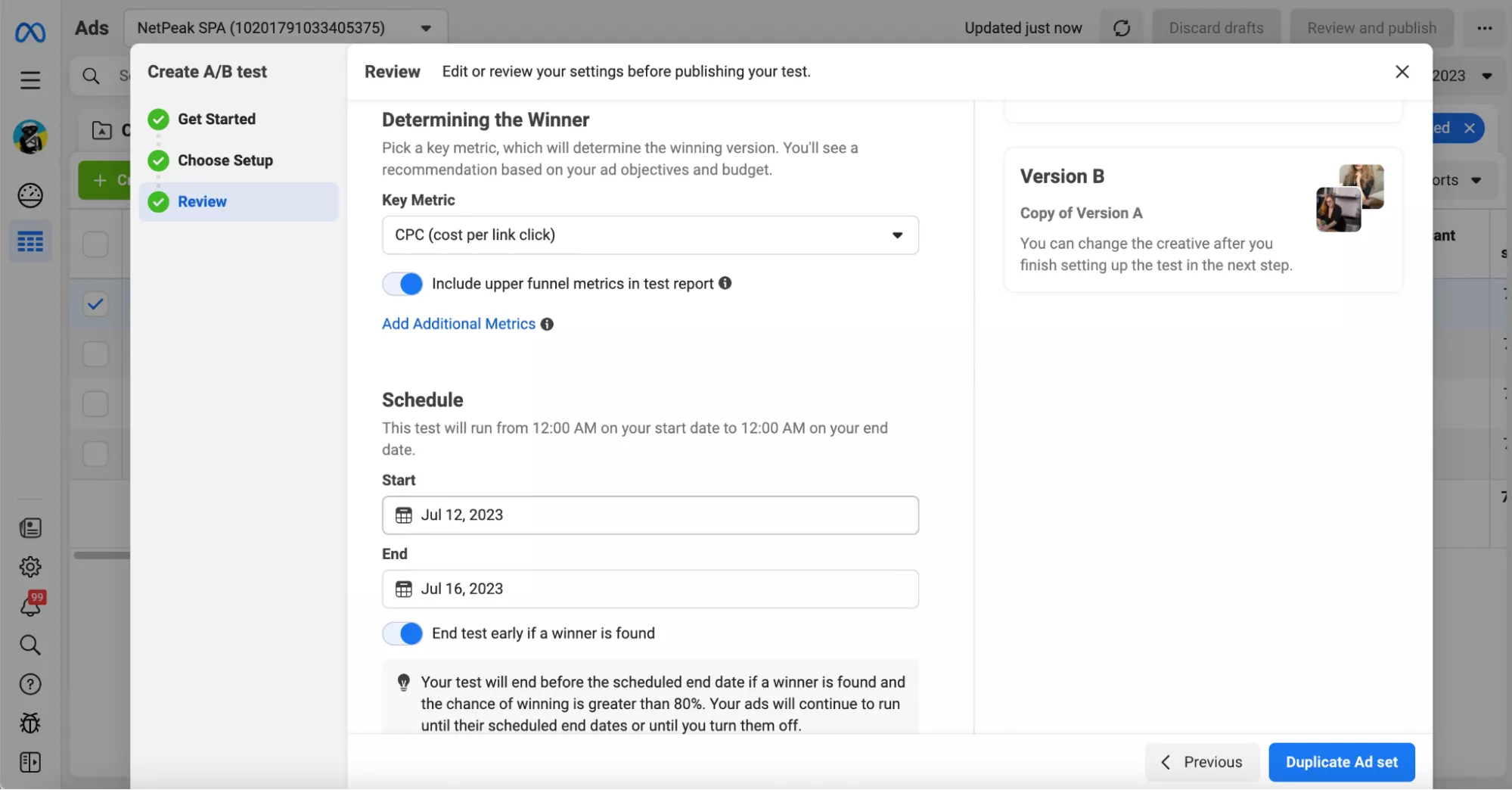

4. Enter a test name, choose the metric that will determine the winner, and set start and end dates. You can also choose to end the test early if a winner is found before the end date.

Your A/B test is created! Now you can make the desired changes to the duplicate ad or ad set and publish it. After the test is completed, all results will be available in Experiments.

There are also other ways to create an A/B test:

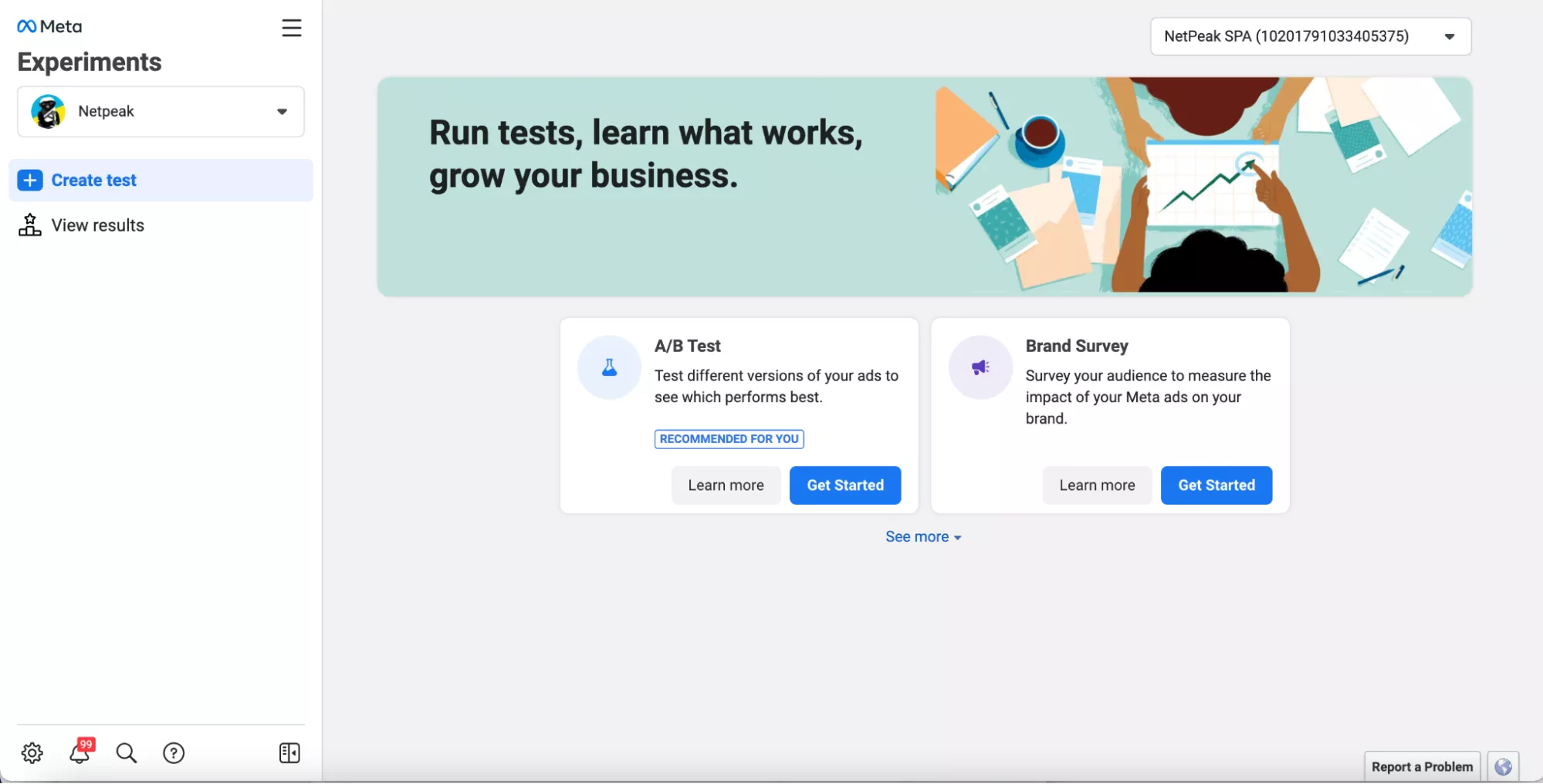

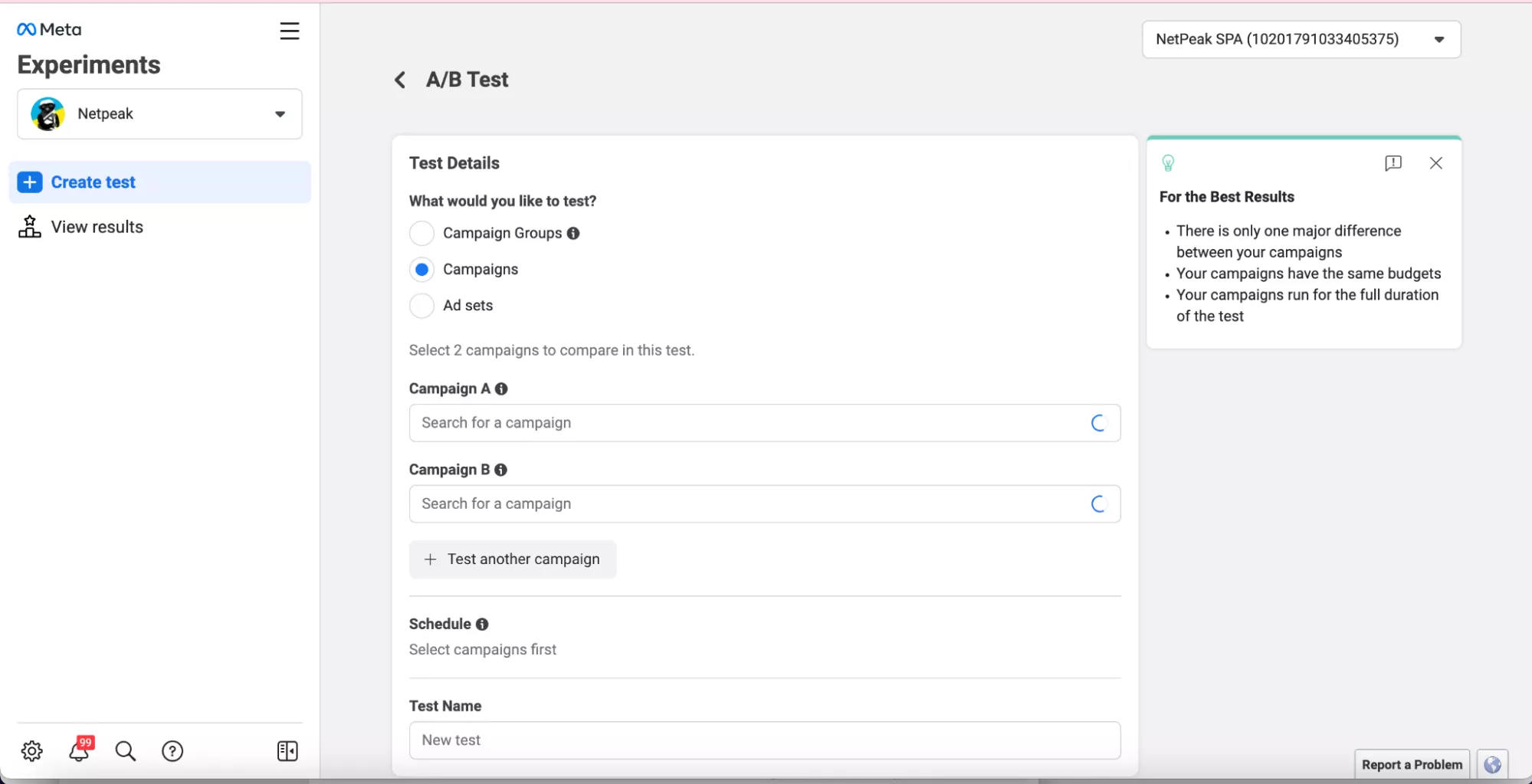

- Directly from the Experiments tool: create new campaigns or duplicate existing ones to compare them and determine the winning strategy.

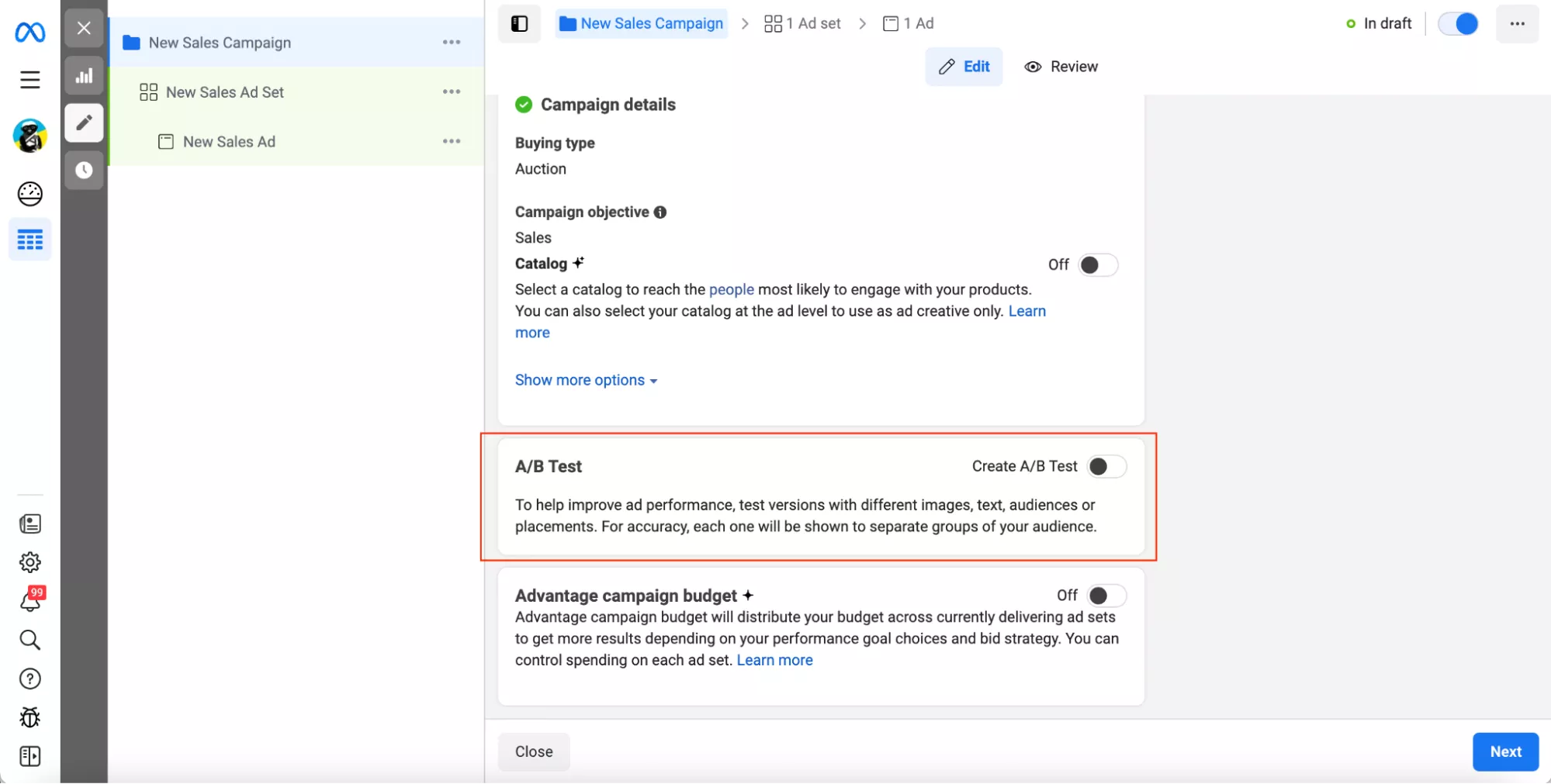

- Choose A/B testing when creating a new ad campaign.

Read more about setting up advertising campaigns in our blog:

- Case Study: Facebook Digital Campaign for the Online Marketplace's Mobile App

- Facebook Worldwide Targeting Trap

- The 2023 Guide to Posting Perfect Facebook Carousel Ads

Conclusions

- A/B testing is a useful practice that should be part of any Facebook ad strategy. Testing lets you consistently improve your ads and achieve better results.

- A/B testing allows you to objectively evaluate different ad variants. With the help of clearly defined success metrics, you can identify the exact changes that are most impactful for achieving your business goals.

- Testing also enables you to collect data on the behaviors and preferences of your target audience. This can help you improve your ad strategy in the future.

- A/B testing can be challenging because you need to have a large enough sample to produce statistically valid results. It is also necessary to take into account all other factors that may affect ad performance, such as competition, timing, and placement.
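How large is "large enough"? The standard normal-approximation formula for comparing two proportions gives a rough answer. The Python sketch below assumes a 5% significance level and 80% power (common defaults, not Meta requirements); the function name and the baseline and target rates are hypothetical inputs you would replace with your own.

```python
import math
from statistics import NormalDist


def sample_size_per_group(p_base: float, p_target: float,
                          alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate sample size per variant for a two-sided two-proportion test."""
    if p_base == p_target:
        raise ValueError("p_base and p_target must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_base + p_target) / 2
    term = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
            + z_beta * math.sqrt(p_base * (1 - p_base) + p_target * (1 - p_target)))
    return math.ceil(term ** 2 / (p_target - p_base) ** 2)


if __name__ == "__main__":
    # Hypothetical goal: detect a CTR lift from 2.0% to 2.5%.
    print(sample_size_per_group(0.02, 0.025), "impressions per variant")
```

For CTR the count is impressions per variant; for CR it is clicks per variant. The smaller the lift you want to detect, the larger the sample you need, roughly in proportion to one over the squared difference between the two rates.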
